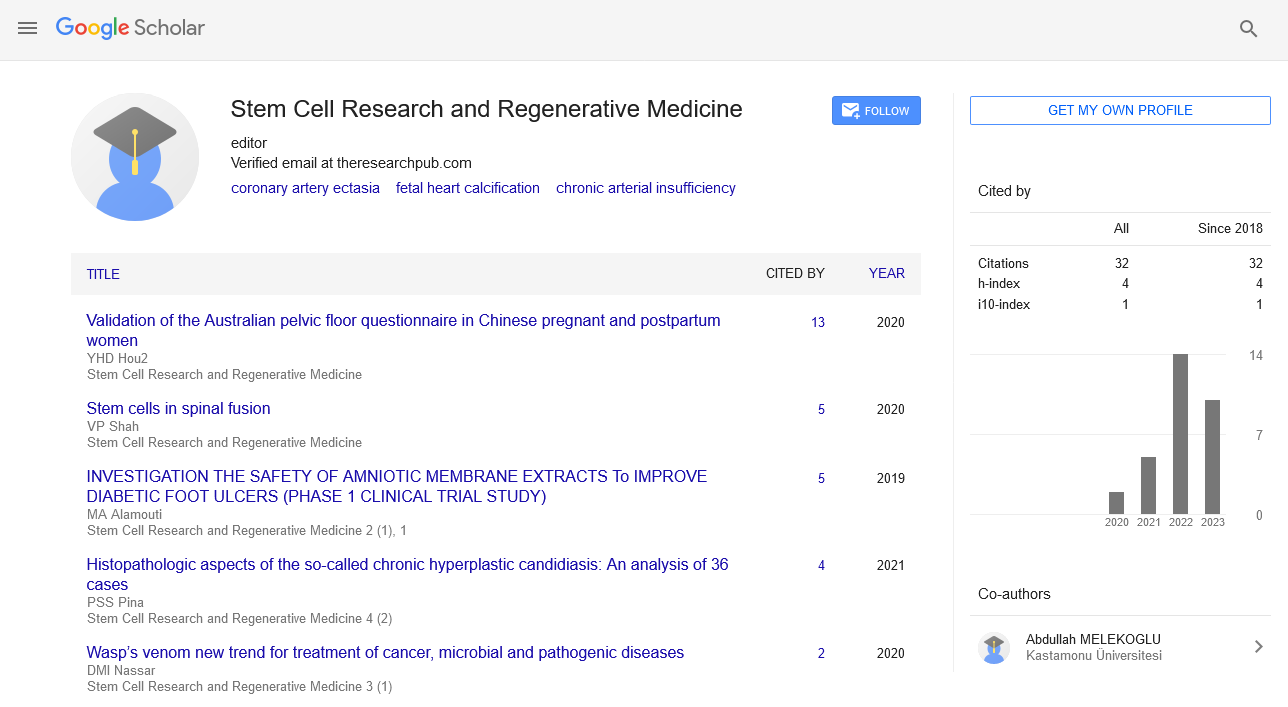

Mini Review - Stem Cell Research and Regenerative Medicine (2023) Volume 6, Issue 2

Ocular Channel Picture Integrity's Effect on Ocular Vessel Partitioning

Dr. Tausif Khan*

Department of Stem Cell and Research, Fiji

E-mail: khantausif123@gmail.com

Received: 01-Apr-2023, Manuscript No. srrm-23-95874; Editor assigned: 04-Apr-2023, Pre-QC No. srrm-23-95874 (PQ); Reviewed: 18-Apr-2023, QC No. srrm-23-95874; Revised: 22-Apr-2023, Manuscript No. srrm-23-95874 (R); Published: 28-Apr-2023, DOI: 10.37532/srrm.2023.6(2).32-34

Abstract

Ocular vessel segmentation is important for detecting vessel changes in various eye diseases, and a consistent computational method is required for automated eye disease screening. Although many methods have been implemented to segment ocular vessels, they have improved accuracy but still lack good sensitivity, owing to inconsistent segmentation of the vessels. Another major cause of low sensitivity is the lack of proper techniques for handling low and varying contrast. In this study, we propose a five-step method to assess the effect of ocular vessel coherence on ocular vessel segmentation. The first four steps form the preprocessing module. The first step processes the ocular fundus image, the second applies morphological operations to deal with non-uniform illumination and noise, and the third uses principal component analysis (PCA) to convert the image to grayscale. The fourth step applies anisotropic diffusion filtering and tests several of its schemes to obtain a more coherent image with the optimized anisotropic diffusion filter, thereby improving the coherence of the ocular vessels. This is the main step, and it feeds the final one. The fifth step applies double thresholding with a morphological image reconstruction technique to generate segmented vessel images. The performance of the proposed method is verified on two publicly accessible databases, DRIVE and STARE. The sensitivity values of 0.811 for STARE and 0.821 for DRIVE are comparable or superior to those of existing methods, and the accuracy values on the two databases, 0.961 and 0.954, are comparable to those of existing methods. The proposed method of segmenting ocular vessels can help medical professionals diagnose ocular diseases and recommend timely treatments.

Keywords

Ocular fundus image • Fundus photography • Segmentation • Coherence • Optimized anisotropic diffusion filtering • Vessel binary image

Introduction

The most common eye diseases include age-related macular degeneration, glaucoma, and diabetic retinopathy (DR). These disorders primarily involve the blood vessels of the retina, the light-sensitive membrane at the back of the eye. In particular, rapidly progressing DR can be severe and lead to permanent vision loss, driven by two main factors: hyperglycemia and hypertension [1]. Global statistics estimate that by 2030, 30 million people worldwide will be affected by DR. Meanwhile, macular degeneration is one of the leading causes of blindness in developed countries, where it affects approximately 1 in 7 people over the age of 50 [2]. Simply put, an untreated eye condition can lead to serious complications such as sudden vision loss. To avoid serious eye disease, early detection, treatment, and consultation with an ophthalmologist are essential. It was recently documented that early detection and prompt treatment with appropriate follow-up could prevent 95% of blindness. To this end, one computerized technique for identifying these progressive diseases is the analysis of ocular fundus images. The fundus camera has two operating configurations: fundus fluorescein angiography (FFA) and digital color fundus imaging [3].

In the FFA configuration, fluorescein, a dye that improves visibility when exposed to ultraviolet light, is injected into the patient's vein. The dye brightens the blood vessels under this light, making it easier to study blood flow in the ocular vascular network. This creates a high-contrast image, giving the expert ophthalmologist a better view of the blood vessels. However, FFA is time-consuming, which makes it difficult for specialists to provide timely analysis and slows the process [4]. Digital color fundus imaging, in contrast, supports a computer-assisted procedure that performs segmentation automatically. It can reduce manual labor and, at the same time, the cost of the examination. Reliable vessel segmentation remains challenging, but computerized analysis of color fundus images enables rapid analysis and processing [5].

The goal of this work is to evaluate the effect of vessel contrast on ocular vessel segmentation. Analyzing color fundus images is challenging because of the low, varying contrast and irregular illumination of the blood vessels against the background [6]. The method can be linked to the FFA analysis process, and reliance on FFA can be reduced by using contrast normalization filtering such as the image coherence method. The proposed method involves five stages. In the first stage, the color image of the ocular fundus is split into its three RGB channels (red, green, blue) [7]. In the second stage, a combined morphological technique is used to remove uneven lighting and noise. The third stage uses a new PCA technique to obtain a good grayscale image. The fourth stage contains the main contribution of this work: because vessels are not always adequately coherent, sufficiently coherent images are obtained using various anisotropic oriented diffusion filtering schemes. The fifth stage is post-processing, which creates well-segmented images based on the proposed image reconstruction method [8].
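The fifth, post-processing stage is described in the abstract as double thresholding with morphological image reconstruction. A minimal sketch of that idea follows, assuming a vessel-enhanced image in which vessels are bright. The function names, the 3x3 neighborhood, and the threshold values are illustrative assumptions, not details taken from the paper.

```python
import numpy as np

def dilate_3x3(mask):
    """Binary dilation with a 3x3 square (8-connected neighborhood)."""
    rows, cols = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    for dy in range(3):
        for dx in range(3):
            out |= padded[dy:dy + rows, dx:dx + cols]
    return out

def double_threshold(image, low, high):
    """Keep weak pixels (>= low) only if connected to a strong pixel (>= high).

    The strong pixels act as the marker and the weak pixels as the mask of a
    binary morphological reconstruction by dilation.
    """
    if low > high:
        raise ValueError("low must not exceed high")
    image = np.asarray(image, dtype=float)
    candidates = image >= low   # weak and strong pixels together
    marker = image >= high      # strong pixels only
    while True:
        grown = dilate_3x3(marker) & candidates
        if np.array_equal(grown, marker):
            return marker       # no further growth: reconstruction is done
        marker = grown

# Hypothetical example: a faint line with one strong pixel, plus isolated noise.
image = np.zeros((7, 7))
image[1, 1:6] = 0.5
image[1, 3] = 0.9
image[5, 5] = 0.5
vessels = double_threshold(image, low=0.4, high=0.8)
```

In this example the whole faint line is kept because it touches a strong pixel, while the isolated weak pixel is discarded. This is the property that lets low-contrast vessel segments survive without admitting background noise.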

Material and Method

Ocular Image to RGB channel conversion

A fundus camera is used for fundus photography; it widens the angle of view of the retina with the help of a lens. The camera, used to image the interior of the eye, consists of a standard low-power microscope with an attached camera sensor. The retinal structures imaged include the posterior pole, the macula, and the optic disc [9]. The fundus camera works on the principle that the retinal surface is illuminated and the light it reflects is captured. After image acquisition, the first stage of the proposed model divides the ocular fundus image into its RGB color channels. Processing three channels separately adds computation time, which the method aims to reduce, so the channels are first corrected for illumination and later combined into a single grayscale image. The removal of noise and uneven lighting is described in the next section [10].
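The channel split in this first stage can be sketched in a few lines of NumPy. The function name and the scaling to the range [0, 1] are assumptions made for illustration; the text does not specify a data type or value range.

```python
import numpy as np

def split_rgb_channels(image):
    """Return the red, green and blue channels of an (H, W, 3) image as floats."""
    image = np.asarray(image)
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError("expected an array of shape (height, width, 3)")
    scaled = image.astype(np.float64)
    if np.issubdtype(image.dtype, np.integer):
        scaled /= 255.0  # assumption: integer input is 8-bit
    return scaled[..., 0], scaled[..., 1], scaled[..., 2]

# Hypothetical 2x3 image that is pure red with a little green.
demo = np.zeros((2, 3, 3), dtype=np.uint8)
demo[..., 0] = 255
demo[..., 1] = 51
red, green, blue = split_rgb_channels(demo)
```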

Elimination of uneven lighting and noise

Correcting non-uniform illumination and removing noise from the ocular fundus image makes more ocular vessels visible. To this end, we used image processing techniques. The first step is to invert the RGB image. Morphological operations are then applied to handle background non-uniformity: top-hat and bottom-hat operations give a better view of the vessels.
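A minimal sketch of the inversion followed by top-hat and bottom-hat filtering is given below, written with NumPy only. The flat square structuring element, the window size, and the function names are assumptions; the text does not state which structuring element or size was used.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _window_filter(image, size, reducer):
    """Apply a min or max over a size x size window (size must be odd)."""
    pad = size // 2
    padded = np.pad(image, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(-2, -1))

def erode(image, size):
    return _window_filter(image, size, np.min)

def dilate(image, size):
    return _window_filter(image, size, np.max)

def top_hat(image, size):
    """Image minus its opening: keeps bright details narrower than the window."""
    return image - dilate(erode(image, size), size)

def bottom_hat(image, size):
    """Closing minus the image: keeps dark details narrower than the window."""
    return erode(dilate(image, size), size) - image

def enhance_channel(channel, size=15):
    """Invert a [0, 1] channel so vessels are bright, then filter it."""
    inverted = 1.0 - np.asarray(channel, dtype=float)
    return top_hat(inverted, size), bottom_hat(inverted, size)

# Hypothetical channel: bright background with a dark one-pixel-wide vessel.
channel = np.ones((21, 21))
channel[:, 10] = 0.2
bright_detail, dark_detail = enhance_channel(channel, size=5)
```

Because the opening estimates the slowly varying background, subtracting it in the top-hat step suppresses uneven illumination while retaining structures thinner than the window, which is why the window should be wider than the widest vessel of interest.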

Conversion to grayscale image

Grayscale images reveal fine detail, which matters especially in medical imaging, where images are used for feature analysis. Observing ocular images is important for tracking the development of ocular disease. Once the uneven lighting problem has been dealt with, the next major task is to combine the RGB channels into a single grayscale image. This is necessary because the change in contrast differs from channel to channel. Good grayscale images are acquired using a novel principal component analysis (PCA) technique. The PCA technique rotates the intensity values of the color space onto orthogonal axes, resulting in a high-contrast grayscale image. The conversion of the retinal RGB channels to grayscale is well defined. PCA provided images in which blood vessels are highly differentiated from the background. Histogram analysis of the PCA output shows a wider distribution and higher intensity levels than the morphologically processed image.
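The PCA conversion can be illustrated by projecting every pixel onto the first principal component of the three-channel color space. This is a generic PCA sketch rather than the paper's exact variant; the function name, the sign convention, and the rescaling to [0, 1] are assumptions.

```python
import numpy as np

def pca_grayscale(rgb):
    """Project an (H, W, 3) image onto its first principal component."""
    rgb = np.asarray(rgb, dtype=float)
    pixels = rgb.reshape(-1, 3)
    centered = pixels - pixels.mean(axis=0)
    covariance = centered.T @ centered / max(len(pixels) - 1, 1)
    # eigh returns eigenvalues in ascending order, so the last eigenvector
    # is the direction of largest variance.
    _, eigenvectors = np.linalg.eigh(covariance)
    first = eigenvectors[:, -1]
    if first.sum() < 0:
        first = -first  # assumption: fix the sign so brighter stays brighter
    projected = (centered @ first).reshape(rgb.shape[:2])
    span = projected.max() - projected.min()
    if span == 0:
        return np.zeros_like(projected)  # constant image: nothing to rescale
    return (projected - projected.min()) / span

# Hypothetical image whose channels are scaled copies of one intensity ramp.
ramp = np.linspace(0.0, 1.0, 16).reshape(4, 4)
rgb = np.stack([ramp, 0.5 * ramp, 0.2 * ramp], axis=-1)
gray = pca_grayscale(rgb)
```

Unlike a fixed weighted sum of channels, the projection weights here are derived from the image itself, so the channel that varies most contributes most to the grayscale result.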

Coherence of the ocular vessels

After the grayscale image is acquired, the ocular vessels should be enhanced, because large vessels are observed correctly whereas small vessels are difficult to analyze. Small vessels can be analyzed correctly using oriented diffusion filtering. This filtering technique was first applied to enhance low-quality fingerprints. Oriented diffusion filtering requires externally computed image orientation information, known as the orientation field (OF). The OF is used to create a diffusion tensor that is oriented along the direction of vessel flow. The main purpose of using anisotropic diffusion filtering is to generate optimal elliptical tilt angle data and to detect small vessels correctly.
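The relationship between the orientation field, the diffusion tensor, and the filtering step can be sketched as follows. This is a simplified coherence-enhancing diffusion written for illustration, not the optimized scheme evaluated in the paper. The box-window structure tensor, the linear mapping from coherence to diffusivity, and the parameters `size`, `alpha`, `dt`, and `steps` are all assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def box_smooth(values, size):
    """Mean over a size x size window (size must be odd)."""
    padded = np.pad(values, size // 2, mode="edge")
    return sliding_window_view(padded, (size, size)).mean(axis=(-2, -1))

def orientation_field(image, size=7):
    """Return the local structure direction (radians) and its coherence."""
    gy, gx = np.gradient(image)  # derivatives along rows (y), then columns (x)
    jxx = box_smooth(gx * gx, size)
    jxy = box_smooth(gx * gy, size)
    jyy = box_smooth(gy * gy, size)
    # Dominant gradient direction; the vessel runs perpendicular to it.
    theta = 0.5 * np.arctan2(2.0 * jxy, jxx - jyy) + np.pi / 2.0
    trace = jxx + jyy
    spread = np.sqrt((jxx - jyy) ** 2 + 4.0 * jxy ** 2)
    coherence = np.where(trace > 1e-12, spread / np.maximum(trace, 1e-12), 0.0)
    return theta, coherence

def coherence_diffusion(image, steps=10, dt=0.15, size=7, alpha=0.01):
    """Explicit diffusion: strong along vessels, weak (alpha) across them."""
    u = np.asarray(image, dtype=float).copy()
    for _ in range(steps):
        theta, coherence = orientation_field(u, size)
        c, s = np.cos(theta), np.sin(theta)
        along = alpha + (1.0 - alpha) * coherence  # assumed linear mapping
        across = alpha
        dxx = along * c * c + across * s * s
        dxy = (along - across) * c * s
        dyy = along * s * s + across * c * c
        uy, ux = np.gradient(u)
        flux_x = dxx * ux + dxy * uy
        flux_y = dxy * ux + dyy * uy
        u += dt * (np.gradient(flux_x, axis=1) + np.gradient(flux_y, axis=0))
    return u

# Hypothetical test image: a vertical one-pixel-wide line.
stripe = np.zeros((15, 15))
stripe[:, 7] = 1.0
theta, coherence = orientation_field(stripe)
smoothed = coherence_diffusion(stripe)
```

For the vertical line, the estimated direction is vertical and the coherence is close to 1, so smoothing acts almost entirely along the line and the line itself is barely blurred. That directional behavior is what allows gaps in thin vessels to be filled without widening them.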

Conclusion

This study analyzes the effect of ocular vessel coherence on segmentation. Previous methods for segmenting ocular vessels addressed the problems of low, varying contrast and noise, but they were ineffective at increasing the sensitivity of small vessel detection, which requires good vessel coherence. The ability to identify ocular vessels correctly will give medical professionals an advantage in analyzing disease progression and recommending appropriate treatments. In this study, the proposed vessel coherence stage (preprocessing) and segmentation module (post-processing) yielded promising results for small vessel segmentation. The described method works well and is comparable to existing methods on the STARE and DRIVE datasets. We compared the performance of the proposed method with conventional methods and with methods based on deep learning. We achieved a sensitivity of 0.821 for DRIVE and 0.811 for STARE, a specificity of 0.962 for DRIVE and 0.959 for STARE, an accuracy of 0.961 for DRIVE and 0.954 for STARE, and an AUC of 0.967 for DRIVE and 0.966 for STARE. The proposed method outperforms conventional deep learning methods and requires less computation time than existing methods. Several improvements remain for future work. One is to implement a robust CNN model with the coherence stage as preprocessing to improve performance. Another is to work on databases and synthetic image generation to improve the training process for obtaining well-segmented images.
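For readers who want to reproduce figures of this kind, sensitivity, specificity, and accuracy follow directly from a pixel-wise comparison of a binary segmentation with its manual annotation. The sketch below uses the standard definitions; the function name and the toy arrays are illustrative, and it assumes both classes are present so that no denominator is zero.

```python
import numpy as np

def segmentation_metrics(predicted, ground_truth):
    """Pixel-wise sensitivity, specificity and accuracy for binary masks."""
    predicted = np.asarray(predicted, dtype=bool)
    ground_truth = np.asarray(ground_truth, dtype=bool)
    tp = np.sum(predicted & ground_truth)      # vessel pixels found
    tn = np.sum(~predicted & ~ground_truth)    # background pixels kept
    fp = np.sum(predicted & ~ground_truth)     # background marked as vessel
    fn = np.sum(~predicted & ground_truth)     # vessel pixels missed
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
    }

# Hypothetical masks: 3 vessel pixels in the annotation, 2 of them detected,
# plus 1 false detection among 5 background pixels.
truth = np.array([1, 1, 1, 0, 0, 0, 0, 0])
guess = np.array([1, 1, 0, 1, 0, 0, 0, 0])
scores = segmentation_metrics(guess, truth)
```

Because vessel pixels are a small fraction of a fundus image, accuracy is dominated by the background, which is why sensitivity is the more informative measure for small vessel detection.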

References

- Dora, Veronica Della. Infrasecular geographies: Making, unmaking and remaking sacred space. Prog Hum Geogr. 42, 44-71 (2018).

- Dwyer, Claire. ‘Highway to Heaven’: the creation of a multicultural, religious landscape in suburban Richmond, British Columbia. Soc Cult Geogr. 17, 667-693 (2016).

- Fonseca, Frederico Torres. Using ontologies for geographic information integration. Transactions in GIS. 6, 231-257 (2009).

- Harrison, Paul. How shall I say it…? Relating the nonrelational. Environ Plan A. 39, 590-608 (2007).

- Imrie, Rob. Industrial change and local economic fragmentation: The case of Stoke-on-Trent. Geoforum. 22, 433-453 (1991).

- Jackson, Peter. The multiple ontologies of freshness in the UK and Portuguese agri‐food sectors. Trans Inst Br Geogr. 44, 79-93 (2019).

- Tetila EC, Machado BB. Detection and classification of soybean pests using deep learning with UAV images. Comput Electron Agric. 179, 105836 (2020).

- Kamilaris A, Prenafeta-Boldú F. Deep learning in agriculture: A survey. Comput Electron Agric. 147, 70-90 (2018).

- Mamdouh N, Khattab A. YOLO-based deep learning framework for olive fruit fly detection and counting. IEEE Access. 9, 84252-8426 (2021).

- Brunelli D, Polonelli T, Benini L. Ultra-low energy pest detection for smart agriculture. IEEE Sens J. 1-4 (2020).
